The SX67X0 systems provide the highest-performing fabric solution in a 1U form factor, delivering up to 4Tb/s of non-blocking bandwidth with 200ns port-to-port latency. These systems are the industry's most cost-effective building blocks for embedded and storage systems that need low port density. Whether measured by price-to-performance or energy-to-performance, these systems offer superior performance, power and space efficiency, reducing capital and operating expenses and providing the best return on investment. They are an ideal choice for smaller departmental or back-end clustering uses with high performance needs, such as storage, database and GPGPU clusters. Powerful servers combined with high-performance storage and applications that use increasingly complex computations are driving data bandwidth requirements ever upward. As servers are deployed with next-generation processors, High-Performance Computing (HPC) environments and Enterprise Data Centers (EDC) need every last bit of bandwidth, which Mellanox's FDR InfiniBand systems deliver.

Built with Mellanox's sixth-generation SwitchX®-2 InfiniBand FDR 56Gb/s switch device, these standalone systems are an ideal choice for top-of-rack leaf connectivity or for building small to extremely large clusters. They enable efficient computing with features such as static routing, adaptive routing and advanced congestion management, which help maximize effective fabric bandwidth by minimizing congestion. The managed systems come with an onboard subnet manager, enabling simple, out-of-the-box fabric bring-up for up to 648 nodes. MLNX-OS® software delivers complete chassis management of firmware, power supplies, fans, ports and other interfaces. Mellanox's edge systems can also be coupled with Mellanox's Unified Fabric Manager (UFM®) software for managing scale-out InfiniBand computing environments. UFM enables data center operators to efficiently provision, monitor and operate the modern data center fabric, boosting application performance and keeping the fabric up and running at all times.

InfiniBand systems come in internally or externally managed versions. Internally managed systems include a CPU that runs the management software (MLNX-OS®) and management ports that carry management traffic to the system. Externally managed systems have no CPU or management ports and are managed using firmware tools.

Mellanox's InfiniBand-to-Ethernet gateway, built on Mellanox's SwitchX®-2 based systems, provides the most cost-effective, high-performance solution for unified data center connectivity. Mellanox's gateways let data centers operate at up to 56Gb/s network speeds while seamlessly connecting to 1, 10 and 40GbE networks with low latency (400ns). Existing LAN infrastructure and management practices can be preserved, easing deployment and providing significant return on investment.
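The 648-node figure quoted for the onboard subnet manager is also the host count of a classic two-tier, non-blocking fat tree built entirely from 36-port switches, i.e. the top-of-rack leaf plus spine layout these systems are pitched for. The Python sketch below sizes such a fabric. The helper name `two_tier_fat_tree` and the even half-down/half-up leaf port split are illustrative assumptions; the listing does not say the subnet manager limit is derived from this topology.

```python
def two_tier_fat_tree(radix: int) -> dict:
    """Size a two-tier, non-blocking fat tree of identical switches.

    Hypothetical helper. Assumes each leaf uses half its ports for hosts
    and half for uplinks (1:1, non-blocking), with exactly one link from
    every leaf to every spine.
    """
    if radix < 2 or radix % 2:
        raise ValueError("radix must be an even number >= 2")
    down = radix // 2       # host-facing ports per leaf
    up = radix - down       # spine-facing ports per leaf
    spines = up             # one uplink from each leaf to each spine
    leaves = radix          # each spine port reaches a different leaf
    return {"leaves": leaves, "spines": spines, "hosts": leaves * down}


if __name__ == "__main__":
    # 36-port switches, as on the SX6720
    print(two_tier_fat_tree(36))  # {'leaves': 36, 'spines': 18, 'hosts': 648}
```

Host count grows as radix²/2, so a 36-port radix yields 648 hosts using 36 leaf and 18 spine switches.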


Boost your network's speed and reliability with this device. It's not just an upgrade; it's a solid foundation for your infrastructure needs, now and in the future. Enjoy faster connections for all your devices today, and rest easy knowing your network is ready for whatever comes next.

Your product will arrive carefully packaged according to our internal item-protection standards. Every item is handled professionally, so you can be sure your order will arrive in perfect condition.

Thanks to our own fleet of vehicles, we can ensure fast and reliable delivery of very large goods, such as complete server systems and data center equipment. We offer individual service and full control over the transport of your goods. Trust us to take care of your transport needs and deliver your products on time within Europe.

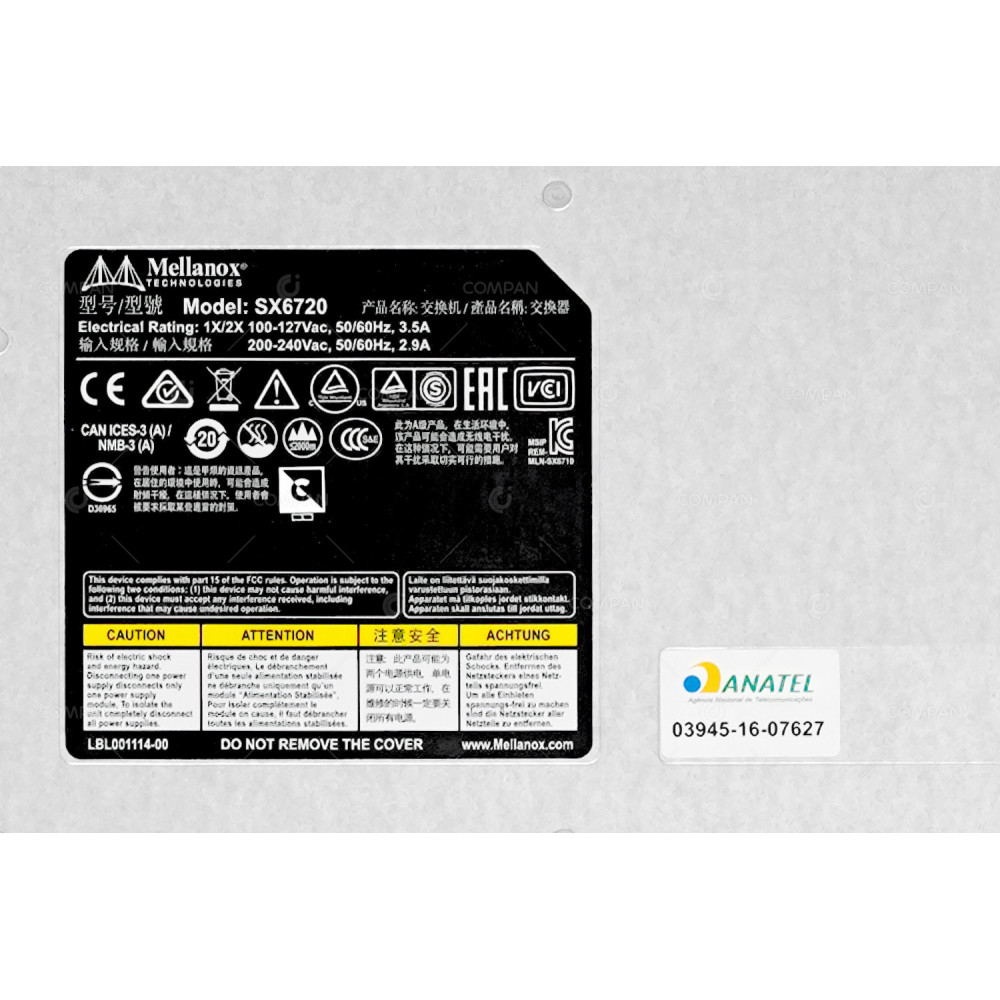

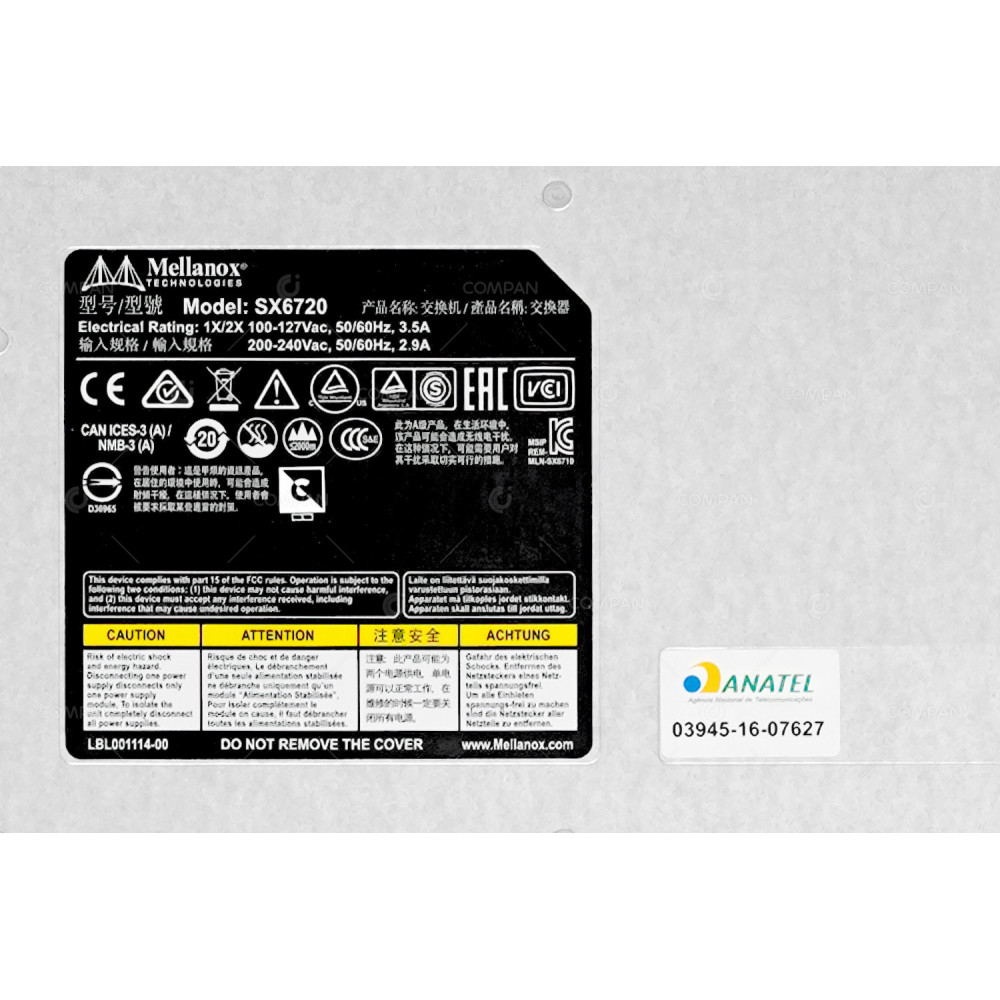

| Attribute | Value |
|---|---|
| Manufacturer | Mellanox |
| Product Line | SX6000 Family |
| Model | SX6720 |
| Ports | 36 x 56Gb/s QSFP+ |
| Management | Fully Managed |
| Stackable | No |
| Internal Ports | Not Applicable |
| Form Factor | 1U Rack Mountable |
| Layer | 2 |
| PoE Standard | No |
| PoE Power | N/A |
| Switching Capacity | 4.032 Tb/s |
| License | - |
| Mounting | No |
| PSU | 2x 400W (PN: 98Y6356) |
| Cooling | 4x Front-to-Rear, Redundant Fans (PN: 98Y6358) |
| Condition | A used item that has been kept in perfect condition in a large data center. Looks like new, with no signs of use. Comes from a legitimate source. |
| Warranty | 1 Year |

| Feature / Characteristic | Specification |
|---|---|
| InfiniBand Ports | 36 (QSFP+) |
| InfiniBand Speed (per port) | 56 Gb/s (FDR) |
| Flexible QSFP Breakout Support | Yes |
| Serial Console Port | 1 x RJ-45 |
| Management Ethernet Port | 1 x 10/100/1000Mb RJ-45 |
| USB Ports | 1 x USB |
| Switching Throughput (Aggregate) | 4.032 Tb/s (non-blocking) |
| Latency (Port-to-Port) | ~200 ns |
| ECMP (Equal-Cost Multi-Path) Support | Yes (for InfiniBand routing, including adaptive routing) |
| Power Supplies | 2 (1+1 redundant, hot-swappable) |
| Fans | N+1 redundant, hot-swappable |
| Airflow Direction | Rear-to-Front (standard) |
| Height (RU) | 1 RU |
| Mounting | 19" Rack Mount Brackets (included) |
| Software | NVIDIA MLNX-OS |
| Minimum Software Version | An MLNX-OS release that supports FDR |
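The 4.032 Tb/s aggregate throughput listed for this switch follows directly from the port count and per-port speed, assuming the common vendor convention of counting both directions of every full-duplex port. A minimal Python sketch; the helper name `aggregate_capacity_tbps` is hypothetical.

```python
def aggregate_capacity_tbps(ports: int, gbps_per_port: float,
                            full_duplex: bool = True) -> float:
    """Aggregate switching capacity in Tb/s (hypothetical helper).

    Assumes the usual datasheet convention: a non-blocking switch is
    credited with every port's rate in both directions (full duplex).
    """
    directions = 2 if full_duplex else 1
    return ports * gbps_per_port * directions / 1000.0


if __name__ == "__main__":
    # 36 FDR ports at 56 Gb/s each
    print(f"{aggregate_capacity_tbps(36, 56):.3f} Tb/s")  # 4.032 Tb/s
```

With `full_duplex=False` the same 36 ports give 2.016 Tb/s, the one-directional figure.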